From version 3, Audiobus supports sending, filtering and receiving MIDI messages.

This section explains how to get up and running with MIDI.

If you have not done so yet, read the Integration Guide, which describes how to get started with the Audiobus SDK.

1. Create a Regular Audio App

Whether your MIDI app is a synthesizer or a controller that produces no audio of its own, it needs an audio engine. This is because:

- In order to receive MIDI in the background, your app needs to stay active in the background. This is only possible if your app has a running audio engine.

- Audiobus launches apps into the background via Inter-App Audio, which is only possible if your app provides an Inter-App Audio component.

Consequently, the first step is to follow the Integration Guide and create a regular audio app with at least one audio sender port.

2. Create MIDI Ports

Now you're ready to create your Audiobus MIDI ports. You can create as many MIDI ports as you like. For example, a multitimbral synth could offer one MIDI receiver port for each timbre: SoundPrism Link Edition offers three MIDI receiver ports, one for the bass synth, one for the chord synth and one for the lead synth. A multi-keyboard app could provide one MIDI sender port for each keyboard.

MIDI Sender Port

Apps that offer MIDI sender ports appear in the "INPUTS" slots of the Audiobus MIDI page. If your app intends to send MIDI to other apps, you need to create an instance of ABMIDISenderPort. The first MIDI sender port you define is the one Audiobus connects to when the user taps your app in the port picker on the MIDI page in Audiobus. It's therefore best to define the port with the most general default behaviour first.

Instantiating a MIDI sender port is simple: create an ABMIDISenderPort with a name and a title.

Like all other ports, the new MIDI sender port needs to be added to the Audiobus controller.

MIDI can then be sent by passing a MIDI packet list to ABMIDIPortSendPacketList.

A working example of a MIDI sender port can be found in our AB Sender sample app, which sends the MIDI notes you play on its local keyboard.
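As a minimal sketch, the plain C below builds the raw bytes of the Note On and Note Off messages that a keyboard like AB Sender's might send. It deliberately stops short of the SDK call. In an app, you would wrap these bytes in a MIDIPacketList (for example with Core MIDI's MIDIPacketListInit and MIDIPacketListAdd) and pass that list, together with your sender port, to ABMIDIPortSendPacketList. The helper names are hypothetical. The messages use channel 0, in line with the advice under "Don't use MIDI channels".

```c
#include <stddef.h>
#include <stdint.h>

/* Hypothetical helpers (not part of the Audiobus SDK).
   Status bytes for channel 0; the low nibble would carry the channel. */
enum { MIDI_NOTE_OFF = 0x80, MIDI_NOTE_ON = 0x90 };

/* Writes a Note On message on channel 0 into out and returns its length.
   Data bytes are masked to 7 bits so they are always valid MIDI. */
size_t make_note_on(uint8_t out[3], uint8_t note, uint8_t velocity) {
    out[0] = MIDI_NOTE_ON;
    out[1] = note & 0x7F;
    out[2] = velocity & 0x7F;
    return 3;
}

/* Writes a Note Off message on channel 0 (release velocity 0) into out. */
size_t make_note_off(uint8_t out[3], uint8_t note) {
    out[0] = MIDI_NOTE_OFF;
    out[1] = note & 0x7F;
    out[2] = 0;
    return 3;
}
```

For example, `make_note_on(bytes, 60, 100)` fills `bytes` with 0x90 0x3C 0x64, which is middle C at velocity 100.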

MIDI Filter Port

Apps that have MIDI Filter Ports appear in the "Effects" slots of the Audiobus MIDI page. A MIDI filter port is instantiated with a name, a title and a block that is called whenever MIDI messages arrive. The block's job is to modify the incoming MIDI data and forward the processed result using ABMIDIPortSendPacketList.

Like all other ports, the new MIDI filter port needs to be added to the Audiobus controller.

To filter MIDI events, copy the original MIDI packet list and change only the events that are relevant to your filter. All other MIDI events should remain unchanged in the processed list.

The receiverBlock is called from a realtime MIDI receive thread. Be careful not to do anything that could cause priority inversion, such as calling Objective-C methods, allocating memory or holding locks.
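The copy-and-modify approach can be sketched in plain C. This suits code running on the realtime receive thread because it writes into a caller-provided buffer, so it neither allocates nor locks. As a simplifying assumption, the sketch works on one flat buffer of complete channel messages without running status, roughly the data of a single MIDIPacket. A real filter would apply it to each packet in the list, stepping through with MIDIPacketNext, and then forward the result with ABMIDIPortSendPacketList. The transposition and the function names are illustrative, loosely modelled on what the AB MIDI Filter sample app does.

```c
#include <stddef.h>
#include <stdint.h>

/* Hypothetical filter helpers (not part of the Audiobus SDK). */

/* Number of data bytes that follow a channel-voice status byte. */
static size_t midi_data_length(uint8_t status) {
    switch (status & 0xF0) {
        case 0xC0: case 0xD0: return 1;   /* program change, channel pressure */
        case 0x80: case 0x90: case 0xA0:
        case 0xB0: case 0xE0: return 2;   /* notes, poly pressure, CC, pitch bend */
        default: return 0;                /* anything else is copied byte by byte */
    }
}

/* Copies len bytes from in to out (out must hold at least len bytes),
   transposing Note On/Off messages by semitones and leaving every other
   byte unchanged. Transposed notes are clamped to 0..127. */
void transpose_midi(const uint8_t *in, uint8_t *out, size_t len, int semitones) {
    size_t i = 0;
    while (i < len) {
        uint8_t status = in[i];
        size_t n = midi_data_length(status);
        out[i] = status;
        for (size_t k = 1; k <= n && i + k < len; ++k) {
            out[i + k] = in[i + k];
        }
        uint8_t type = status & 0xF0;
        if ((type == 0x80 || type == 0x90) && i + 1 < len) {
            int note = in[i + 1] + semitones;
            if (note < 0) note = 0;
            if (note > 127) note = 127;
            out[i + 1] = (uint8_t)note;
        }
        i += 1 + n;
    }
}
```

Everything that is not a Note On or Note Off is copied through byte for byte. This includes controllers, program changes, system messages and stray data bytes, so the output keeps all unrelated events unchanged.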

MIDI Receiver Port

Apps that have MIDI receiver ports appear in the "Outputs" slots of the Audiobus MIDI page.

If your app is already an Inter-App Audio instrument (your sender port has type 'auri') or an Inter-App Audio music effect (your filter port has type 'aurm'), you don't need to implement a MIDI receiver port. Audiobus will show your app in the "Outputs" slot and provide your app with MIDI via Inter-App Audio.

If you manually create at least one ABMIDIReceiverPort instance, then Audiobus will not send any MIDI via Inter-App Audio to your app. All MIDI will be sent via the receiver port instead.

A MIDI receiver port is instantiated with a name, a title and a block that is called whenever MIDI messages arrive.

Like all other ports, the new MIDI receiver port needs to be added to the Audiobus controller.

The receiverBlock is called from a realtime MIDI receive thread. Be careful not to do anything that could cause priority inversion, such as calling Objective-C methods, allocating memory or holding locks.
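A common way to honour these constraints is to have the receiver block do nothing but copy each incoming message into a lock-free single-producer/single-consumer queue. A non-realtime thread (for example, one updating your UI) then drains the queue later. This pattern is not something the Audiobus SDK prescribes. The C11 sketch below assumes short channel messages of at most three bytes and a fixed capacity, and all names in it are hypothetical.

```c
#include <stdatomic.h>
#include <stdbool.h>
#include <stddef.h>
#include <stdint.h>

/* Hypothetical lock-free SPSC queue (not part of the Audiobus SDK). */
#define RING_CAPACITY 256  /* must be a power of two */

typedef struct {
    uint8_t bytes[3];
    uint8_t length;
} midi_event;

/* The realtime receive thread is the only producer and the main thread is
   the only consumer. No locks and no allocation after initialisation. */
typedef struct {
    midi_event slots[RING_CAPACITY];
    _Atomic size_t head;  /* next slot to write, advanced by the producer */
    _Atomic size_t tail;  /* next slot to read, advanced by the consumer */
} midi_ring;

void ring_init(midi_ring *r) {
    atomic_init(&r->head, 0);
    atomic_init(&r->tail, 0);
}

/* Called from the realtime thread. Returns false (dropping the event) when full. */
bool ring_push(midi_ring *r, midi_event ev) {
    size_t head = atomic_load_explicit(&r->head, memory_order_relaxed);
    size_t tail = atomic_load_explicit(&r->tail, memory_order_acquire);
    if (head - tail == RING_CAPACITY) return false;
    r->slots[head & (RING_CAPACITY - 1)] = ev;
    atomic_store_explicit(&r->head, head + 1, memory_order_release);
    return true;
}

/* Called from a non-realtime thread. Returns false when the queue is empty. */
bool ring_pop(midi_ring *r, midi_event *out) {
    size_t tail = atomic_load_explicit(&r->tail, memory_order_relaxed);
    size_t head = atomic_load_explicit(&r->head, memory_order_acquire);
    if (head == tail) return false;
    *out = r->slots[tail & (RING_CAPACITY - 1)];
    atomic_store_explicit(&r->tail, tail + 1, memory_order_release);
    return true;
}
```

Your receiver block would call ring_push for each message it wants to hand off. Your main thread would call ring_pop in a loop, for example from a timer, until it returns false. When the queue is full, ring_push drops the event rather than blocking the realtime thread.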

Multi-instance MIDI Ports

Audiobus's Multi-instance MIDI ports feature lets you create new MIDI ports on demand. Here are some examples where Multi-instance ports are useful:

- Multitrack MIDI recorder apps: Each time your MIDI recorder is added to an Audiobus MIDI connection pipeline, a new instance of a MIDI receiver port is created automatically. MIDI events received on different dynamically created MIDI receiver ports are stored on different tracks.

- Multi-keyboard apps: Each time the multi-keyboard app is added to a connection pipeline, a new MIDI sender port instance is created, and the app creates a new keyboard UI for that port instance.

- Multi-MIDI-effect racks: Add a MIDI effect to multiple connection pipelines at the same time, and receive, process and filter the MIDI streams of these pipelines separately. The AB MIDI Filter sample app does this: each time it is added to a connection pipeline, it adds a new channel strip that lets the user transpose the connected stream.

All three port types, ABMIDISenderPort, ABMIDIFilterPort and ABMIDIReceiverPort, can be configured as Multi-instance ports. The following walkthrough describes how this is done for a MIDI filter port, based on the AB MIDI Filter sample app.

To prevent a retain cycle, we first create a weak reference to self.

A multi-instance MIDI filter port is instantiated with four parameters: a name, a title, an instanceConnectedBlock and an instanceDisconnectedBlock. The first block is called each time the filter port is added to a connection pipeline, and the second each time the filter port is removed from a connection pipeline.

The instanceConnectedBlock calls the selector portInstanceAdded:, which adds a MIDI receiver callback to the automatically created filter port instance and informs the UI about the new port.

The instanceDisconnectedBlock calls the selector portInstanceRemoved: which simply informs the UI about the change.

To configure a port as a Multi-instance port, you need to flag it as such in the Audiobus registry by ticking the "Multi-instance" checkbox beside the port entry.
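The per-instance bookkeeping implied by portInstanceAdded: and portInstanceRemoved: can be sketched as a fixed-size table keyed by the port instance. Each connected pipeline gets one slot of state, such as the transposition amount of its channel strip. This C sketch is an assumption about how such an app might store its state, not the sample app's actual code. Here, instance is an opaque pointer identifying the port instance. The sketch does no synchronisation between threads, which a real app would have to handle.

```c
#include <stdbool.h>
#include <stddef.h>

/* Hypothetical per-instance state table (not part of the Audiobus SDK). */
#define MAX_INSTANCES 16

typedef struct {
    const void *instance;  /* identifies the port instance; NULL marks a free slot */
    int transpose;         /* semitones applied to this instance's stream */
} channel_strip;

typedef struct {
    channel_strip strips[MAX_INSTANCES];
} strip_table;

/* Call when a port instance is connected. Returns the slot index, or -1 if full. */
int strip_table_add(strip_table *t, const void *instance) {
    if (instance == NULL) return -1;
    for (int i = 0; i < MAX_INSTANCES; ++i) {
        if (t->strips[i].instance == NULL) {
            t->strips[i].instance = instance;
            t->strips[i].transpose = 0;
            return i;
        }
    }
    return -1;
}

/* Looks up the strip belonging to an instance, or returns NULL. */
channel_strip *strip_table_find(strip_table *t, const void *instance) {
    if (instance == NULL) return NULL;
    for (int i = 0; i < MAX_INSTANCES; ++i) {
        if (t->strips[i].instance == instance) return &t->strips[i];
    }
    return NULL;
}

/* Call when a port instance is disconnected. Returns true if it was found. */
bool strip_table_remove(strip_table *t, const void *instance) {
    channel_strip *strip = strip_table_find(t, instance);
    if (strip == NULL) return false;
    strip->instance = NULL;
    return true;
}
```

Freed slots are reused, so a pipeline that is removed and added again simply gets a fresh strip with a transposition of 0.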

3. Avoid double notes by disabling Core MIDI when necessary

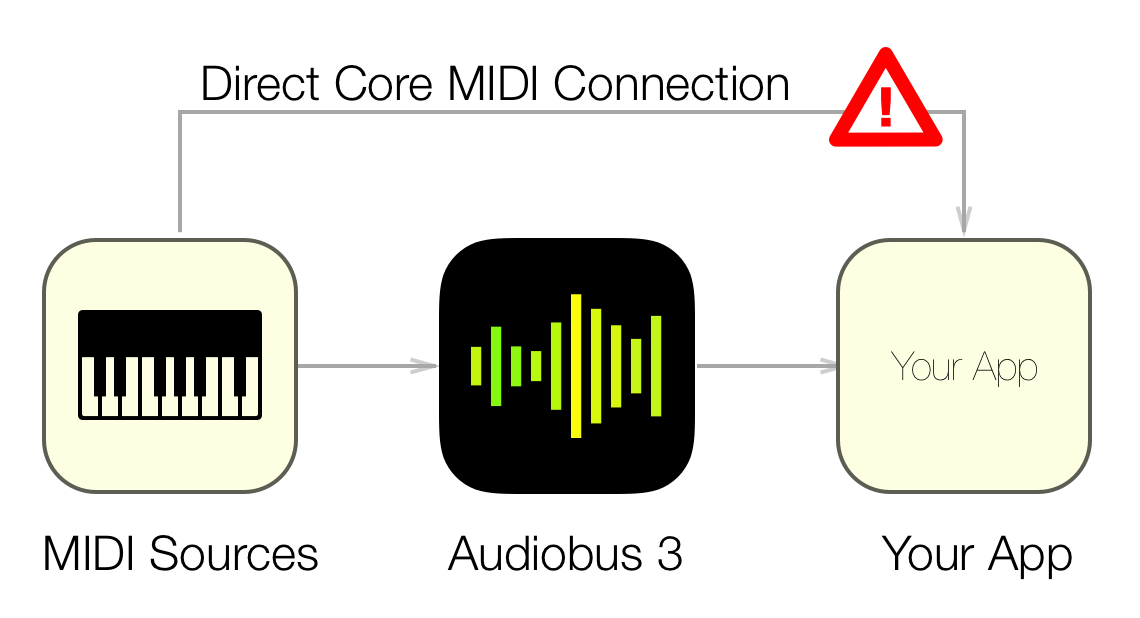

Audiobus 3's MIDI routing operates independently of Core MIDI, allowing users to set up particular MIDI routings. However, Core MIDI knows nothing about Audiobus, and consequently there are certain situations – particularly when MIDI hardware is involved – that can result in a double routing, causing incoming MIDI events to be doubled up.

These double routings are very obscure and difficult for inexperienced users to understand and diagnose. Consequently, to avoid double notes and other problems, it's very important to disable receiving and sending MIDI via Core MIDI in your app when your app is part of an Audiobus session.

The figure above shows a case in which receiving via Core MIDI should be disabled. A MIDI source (such as a MIDI keyboard) is connected to your iOS device. Audiobus 3 receives the MIDI events, routes them through any connected MIDI effects and then forwards them to your app. At the same time, your app is also listening to the connected keyboard via Core MIDI. As a result, your app receives each MIDI event twice: once from Audiobus and once via Core MIDI.

If you don't stop sending to other destinations via Core MIDI, these destinations will receive MIDI notes twice: once directly from your app and once from Audiobus.

If you don't stop receiving from other sources via Core MIDI, your app will receive MIDI notes twice: once directly from the source via Core MIDI and once from Audiobus.

To prevent such double routings, ABAudiobusController provides two properties, enableReceivingCoreMIDIBlock and enableSendingCoreMIDIBlock.

- If your app has at least one MIDI receiver port (Audiobus or Inter-App Audio) you must assign a block to enableReceivingCoreMIDIBlock.

- If your app has at least one MIDI sender port, you must assign a block to enableSendingCoreMIDIBlock.

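Audiobus uses these blocks to tell your app when it should stop, and later resume, receiving or sending MIDI via Core MIDI. In response, your app should enable or disable its Core MIDI input and output.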
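The sketch below shows app-side state these blocks could drive. It assumes each block is called with a flag saying whether Core MIDI receiving or sending should currently be enabled; check the block types in the SDK headers to confirm. The blocks store the flag in an atomic variable, and your Core MIDI read callback and send path consult it. Using atomics keeps the check safe to perform on the realtime Core MIDI thread. The function names are hypothetical. Instead of ignoring packets, your app could also disconnect its Core MIDI input port from its sources with MIDIPortDisconnectSource.

```c
#include <stdatomic.h>
#include <stdbool.h>

/* Hypothetical app-side flags (not part of the Audiobus SDK). Core MIDI is
   enabled by default, i.e. while the app is not part of an Audiobus session. */
static atomic_bool core_midi_receiving = true;
static atomic_bool core_midi_sending = true;

/* Call from enableReceivingCoreMIDIBlock with the flag the block receives. */
void set_core_midi_receiving_enabled(bool enabled) {
    atomic_store(&core_midi_receiving, enabled);
}

/* Call from enableSendingCoreMIDIBlock with the flag the block receives. */
void set_core_midi_sending_enabled(bool enabled) {
    atomic_store(&core_midi_sending, enabled);
}

/* Check at the top of your Core MIDI read callback. When it returns false,
   ignore the packets, because Audiobus is handling MIDI routing for the app. */
bool should_handle_core_midi_input(void) {
    return atomic_load(&core_midi_receiving);
}

/* Check before calling MIDISend or MIDIReceived for Core MIDI destinations. */
bool should_send_via_core_midi(void) {
    return atomic_load(&core_midi_sending);
}
```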

4. Update Audiobus Registry

Just like audio ports, MIDI ports need to be registered with the Audiobus registry. Once you've set up your MIDI ports, open your app page on the Audiobus Developer Center and fill in any missing port details.

Filling in the port details here allows all of your app's ports to be seen within Audiobus before your app is launched.

If your app is MIDI only (i.e. it does not produce audio on its own), then you should hide your audio sender port so that it does not appear within Audiobus. To do so, check the "Hidden" checkbox on the Audiobus registry beside your audio ports.

It's important that you fill in the "Ports" section correctly, matching the values you use for your instances of ABMIDISenderPort, ABMIDIFilterPort and ABMIDIReceiverPort. If you don't, you will see "Port Unavailable" messages within Audiobus when trying to use your app.

Once you have updated and saved the port information, you will receive a new API key. Copy this API key into your app. On the next launch, the port configuration in your app is compared to the port information encoded in the API key. If mismatches are detected, detailed error messages are printed to the console, so check the console to find out whether anything is wrong with your port registration.

5. Be a Good MIDI Citizen

There are a few extra steps you need to take in order to make sure your app functions correctly:

- Hide your audio sender ports if your app is a pure MIDI app.

- Avoid double notes by disabling Core MIDI when necessary.

- Avoid note soup with a filtered MIDI stream by respecting Local On/Off.

- Mute your internal sound engine when acting as a MIDI controller.

Here's how:

Hide your audio sender ports if your app is a pure MIDI app

If your app is a pure MIDI app, you need to hide your audio sender port; otherwise it will be shown in the audio input port picker list. Here's how to do that:

- Visit your app at http://developer.audiob.us/apps, scroll down to the port list, and check the "Hidden" checkbox right below your audio sender port.

- After instantiating your audio sender port, set its isHidden property: `ABPort *audioSenderPort = [[ABAudioSenderPort alloc] initWithName:...]; audioSenderPort.isHidden = YES;`

Sample code for a hidden port can be found in the file ABMIDIFilterAuidoEngine.m in the Audiobus 3 SDK.

Avoid note soup by respecting Local On/Off

This section applies to you if your app sends MIDI (via ABMIDISenderPort) and also receives MIDI, either via ABMIDIReceiverPort or through Inter-App Audio via an ABAudioSenderPort of the "Remote Instrument" type 'auri'. For example, a synth app that also behaves as a MIDI controller falls into this category.

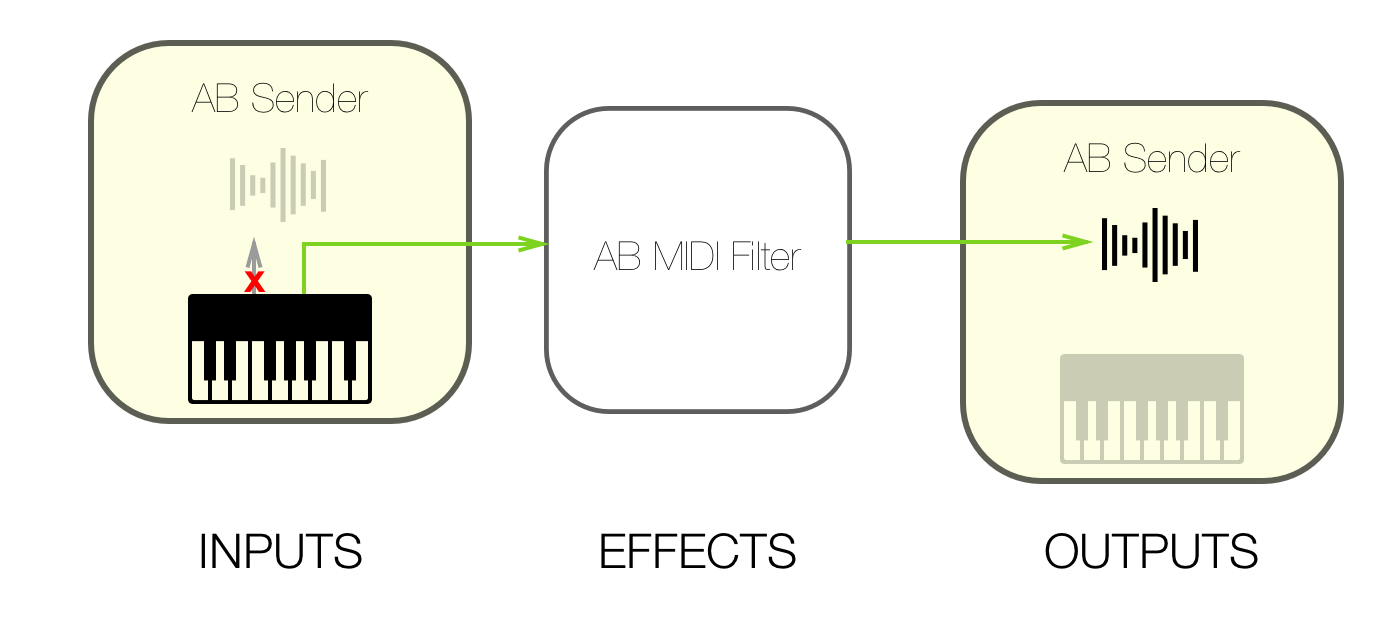

Audiobus allows users to create connection pipelines where MIDI events generated by an app are routed through an effect and then back to the original app, as shown in the figure above.

In this scenario, a synth app is expected to generate audio based on the modified MIDI events coming from the Audiobus chain, and not local MIDI events originating from the app.

If your app incorrectly responds to both the local MIDI events and those coming from the Audiobus signal chain, then your users are likely to experience double notes and other unexpected behaviour, as your app receives MIDI events twice.

To avoid this situation, ABMIDISenderPort provides a property called localOn. When the value of this property is YES, your app should respond to local events as normal, as well as any events coming from Audiobus. However, when the value of localOn is NO, it's important that your app only respond to MIDI events coming from Audiobus.

To respond to changes in localOn, observe the property on your ABMIDISenderPort instance (for example with key-value observing) and respond appropriately when it changes.

See the AB Sender sample app for a demonstration of this.

Don't call Objective-C code from a realtime thread such as the audio render callback. If you need to evaluate the localOn property there, use the realtime-safe C function ABMIDISenderPortIsLocalOn.
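The localOn rule reduces to a small decision that a realtime-safe note handler can make for every event. In this sketch, local_on is a plain parameter. In an app, it would be the value returned by ABMIDISenderPortIsLocalOn for your sender port; see the SDK header for that function's exact signature. The note_source type is a hypothetical way of tagging where an event came from. Local events should still go out through your ABMIDISenderPort in both cases. localOn only decides whether your own synth also plays them directly.

```c
#include <stdbool.h>

/* Hypothetical tag for where a note event came from (not part of the SDK). */
typedef enum {
    NOTE_SOURCE_LOCAL,    /* the app's own keyboard or sequencer */
    NOTE_SOURCE_AUDIOBUS  /* MIDI delivered by Audiobus (receiver port or Inter-App Audio) */
} note_source;

/* Returns true if the internal synth should play this event.
   local_on stands in for the value of ABMIDISenderPortIsLocalOn. */
bool synth_should_play(note_source source, bool local_on) {
    if (source == NOTE_SOURCE_AUDIOBUS) {
        return true;   /* always respond to MIDI coming from Audiobus */
    }
    return local_on;   /* play local events only while Local On is YES */
}
```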

Mute your internal sound engine

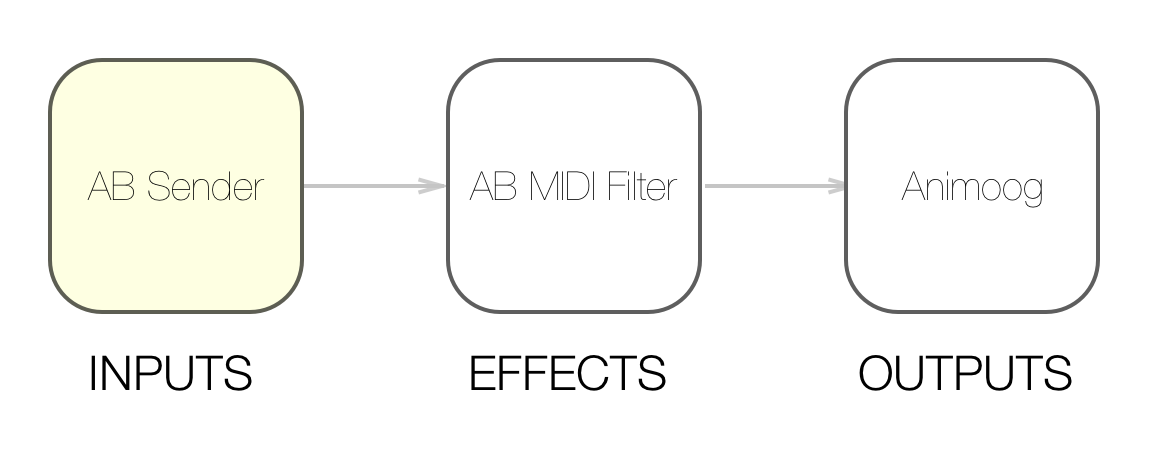

If your app is both a MIDI controller or filter and a sound generator, the internal sound engine needs to be muted in some cases. Consider the MIDI connection pipeline shown in the figure above.

In this example, AB Sender is used as a MIDI controller in the MIDI input slot. The generated notes are sent to AB MIDI Filter and from there to Animoog. In this scenario, AB Sender is a pure MIDI controller and must not generate any audio of its own.

To allow your app to mute when appropriate, ABAudioSenderPort provides a property called muted. When the value of this property is YES, your app should avoid producing any audio output. When NO, your app should behave as usual.

To respond to changes to the muted property, observe the property on your ABAudioSenderPort instance and respond appropriately when it changes.

See the AB Sender sample app for a demonstration of this.

Don't call Objective-C code from a realtime thread. If you need to evaluate the muted property there, use the realtime-safe C function ABAudioSenderPortIsMuted.

Where possible, Audiobus will mute your app's audio engine for you, but it can't make your app stop processing audio. So whenever your output is muted and therefore inaudible, make sure your sound engine stops processing audio. This saves CPU resources as well as battery power.
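The advice to stop processing, rather than merely output silence, can be sketched as a render routine that returns early when muted. Here muted is a plain parameter standing in for the value of ABAudioSenderPortIsMuted. The naive sawtooth oscillator is a hypothetical stand-in for your sound engine.

```c
#include <stdbool.h>
#include <stddef.h>
#include <string.h>

/* Hypothetical sound engine state: a naive sawtooth oscillator. */
typedef struct {
    float phase;      /* current position in [0, 1) */
    float increment;  /* phase advance per sample */
} simple_osc;

/* Renders frames samples into out. When muted, writes silence and returns
   immediately without running the oscillator, so no synthesis work is done.
   Returns true if audio was generated. */
bool render(simple_osc *osc, float *out, size_t frames, bool muted) {
    if (muted) {
        memset(out, 0, frames * sizeof *out);
        return false;
    }
    for (size_t i = 0; i < frames; ++i) {
        out[i] = 2.0f * osc->phase - 1.0f;  /* map phase to [-1, 1) */
        osc->phase += osc->increment;
        if (osc->phase >= 1.0f) osc->phase -= 1.0f;
    }
    return true;
}
```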

Don't use MIDI channels

Audiobus-compatible apps should not use the MIDI Channel information contained within MIDI packets. As Audiobus uses ports (instances of ABMIDIReceiverPort, ABMIDIFilterPort and ABMIDISenderPort) for routing of MIDI, the MIDI Channel data is not required and using it can cause unexpected behaviour.

- If possible, have your app send only on MIDI channel 0 (the value of the channel nibble in the status byte, which most apps display as channel 1).

- Do not evaluate MIDI channels. It should make no difference for your app if a message has MIDI channel 1, 2, etc.

- If your app is a multitimbral synth and must therefore receive on multiple MIDI channels, create a separate instance of ABMIDIReceiverPort for each timbre you want to use. For example, the app SoundPrism creates one MIDI port for the bass sound, one for the chord sound and one for the melody sound.

- If your app is a complex MIDI controller, create an instance of ABMIDISenderPort for each MIDI channel you want to use. For example, the app Fugue Machine creates one instance of ABMIDISenderPort for each playhead.

By using Audiobus MIDI ports instead of MIDI Channel information, your app allows Audiobus to correctly display MIDI sources and destinations to the user.
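In code, channel independence comes down to two bit operations on the status byte. Mask off the low nibble when interpreting incoming messages, and clear it when sending. This C sketch uses hypothetical helper names; the status-byte layout itself is standard MIDI 1.0.

```c
#include <stdbool.h>
#include <stdint.h>

/* Hypothetical helpers (not part of the Audiobus SDK). */

/* Receiving: look only at the message type (high nibble) and ignore the channel. */
static inline uint8_t midi_message_type(uint8_t status) {
    return status & 0xF0;
}

/* True for a Note On with non-zero velocity, regardless of its channel. */
static inline bool midi_is_note_on(const uint8_t msg[3]) {
    return midi_message_type(msg[0]) == 0x90 && msg[2] > 0;
}

/* Sending: move channel-voice messages (0x80-0xEF) onto channel 0.
   System messages (0xF0-0xFF) and data bytes carry no channel and pass through. */
static inline uint8_t midi_status_on_channel0(uint8_t status) {
    return (status >= 0x80 && status < 0xF0) ? (uint8_t)(status & 0xF0) : status;
}
```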

Don't show private MIDI ports

Audiobus uses private virtual Core MIDI sources and destinations to route MIDI from app to app. Normally, private MIDI ports should never appear within other apps. Unfortunately, a bug in iOS currently makes these ports visible from time to time.

To prevent your app's list of Core MIDI sources and destinations from filling up with Audiobus MIDI ports, check whether a port is private before displaying it to the user. Core MIDI exposes this as the endpoint's kMIDIPropertyPrivate property, which you can read with MIDIObjectGetIntegerProperty; a non-zero value means the endpoint is private.

Ideally, you should perform this check a few milliseconds after a port has appeared, to give the owning app time to set this flag on its end.
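The delayed check can be sketched as a small predicate your UI code evaluates before listing an endpoint. The Core MIDI query is left out so the logic stands alone. Here, is_private would hold the kMIDIPropertyPrivate value you read with MIDIObjectGetIntegerProperty once the delay has passed. The struct, the function name and the 50 ms delay are assumptions, since the guide does not specify an exact delay.

```c
#include <stdbool.h>
#include <stdint.h>

/* Hypothetical UI-side record for a newly seen Core MIDI endpoint. */
typedef struct {
    uint64_t appeared_at_ms;  /* when the endpoint was first noticed */
    bool is_private;          /* kMIDIPropertyPrivate, read after the delay */
} endpoint_candidate;

/* Assumed value; the exact delay is not specified. */
#define PRIVATE_CHECK_DELAY_MS 50

/* Returns true only once the endpoint has existed long enough for its
   private flag to be trusted, and that flag marks it as public. */
bool endpoint_should_be_listed(const endpoint_candidate *ep, uint64_t now_ms) {
    if (now_ms < ep->appeared_at_ms ||
        now_ms - ep->appeared_at_ms < PRIVATE_CHECK_DELAY_MS) {
        return false;  /* too early: keep the endpoint hidden for now */
    }
    return !ep->is_private;
}
```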

Automatic App Termination

There is currently a bug in iOS that breaks receiving from Core MIDI when an app is relaunched into the background. To work around this bug, we have added a mechanism to the Audiobus SDK that allows us to terminate and restart your app while it is in the background. We only use this mechanism if the aforementioned bug is observed. Currently, only apps with one or more ports of type ABMIDIReceiverPort or ABMIDIFilterPort are affected by this bug.

If your app provides one of these ports, you might want to observe ABApplicationWillTerminateNotification. This notification is sent shortly before Audiobus terminates and relaunches your app.